A new tussle between Microsoft and its partners that use Bing’s search index data has highlighted the company’s intention to lead the race for generative AI, experts say.

Earlier this week, Microsoft warned some of the partners to which it licenses its Bing search index data against using that data to train their own generative AI engines or chatbots, according to a Bloomberg report.

“We’ve been in touch with partners who are out of compliance as we continue to consistently enforce our terms across the board. We’ll continue to work with them directly and provide any information needed to find a path forward,” a Microsoft spokesperson said.

Typically, smaller search engines such as Yahoo, You.com, Neeva and DuckDuckGo sign licensing agreements with Microsoft to use data from Bing’s search index. The data acts as a map of the internet, which the smaller search engines scan to produce search results when a user submits a query to them.

According to experts, licensing Bing’s index saves the smaller search engines the time and money they would otherwise spend building their own.

Microsoft’s carrot-and-stick strategy

Why doesn’t Microsoft want smaller engines to use Bing’s search index data or APIs to train their own chatbots or AI engines? The simple answer, according to analysts, is the company’s carrot-and-stick strategy to maintain its lead in the AI race.

“In a way, one could view Microsoft’s implementation of OpenAI products or technology as any other means of market ownership and control, the same way we view market-leading development platform VS Code or Microsoft Office with their various technologies serving as both a carrot for consumers and as a stick for rivals (and even ecosystem partners),” said Omdia’s Chief Analyst Bradley Shimmin.

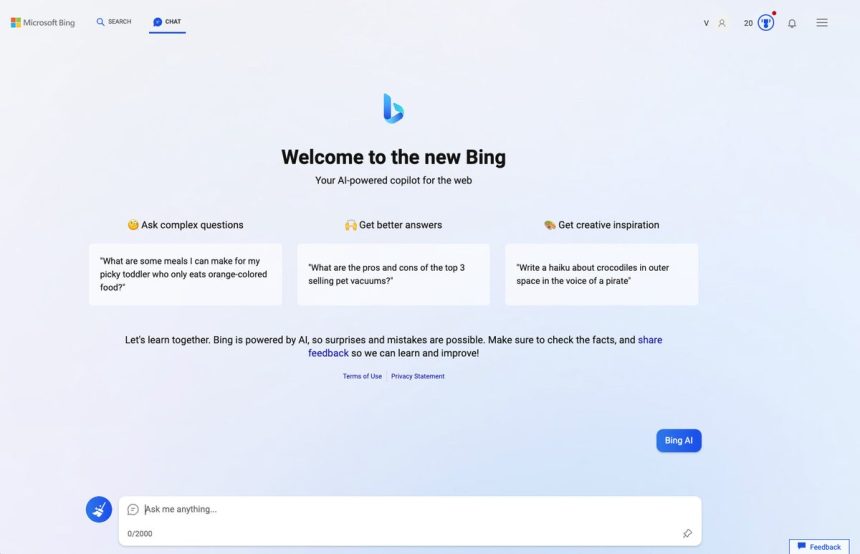

Microsoft added the technology driving ChatGPT to Bing in February.

The company’s fear is that smaller search engines could use the data they obtain through their licensing agreements to replicate features of Bing’s ChatGPT-powered chatbot in their own products and eventually eat into Bing’s market share, experts said.

“It is technically possible for smaller search engines to create their own versions of ChatGPT using Bing’s search index data along with other algorithms. The challenge for large language models (LLMs), which ChatGPT is based on, has been the need to ingest data and smaller search engines are getting this data ‘free’ from their existing arrangement,” said Constellation Research’s Principal Analyst Holger Mueller.

In addition, Microsoft may view such use as an infringement on the proprietary value of the ChatGPT technology developed by OpenAI, according to Shimmin.

The data used to train ChatGPT “means a great deal,” according to Shimmin, because OpenAI had to consider how consumers would use the technology, for example to fulfill requests that depend on, or are influenced by, private, time-sensitive or domain-specific data.

To produce more factual responses in GPT-4, for example, OpenAI moved from using fully open data sets to a mix of open and closed data sets, said Shimmin. These closed data sets, along with many other model nuances such as model weightings, fine-tuning and even baseline prompt engineering, all contribute to the proprietary value of GPT-4 and similar models, he added.

“In the case of Microsoft, OpenAI’s models are the commodity and Bing is but one of the delivery mechanisms, same as Office Copilot X, GitHub Copilot, who have their own medium that sees the world through the eyes of curated, partially closed LLM training assets,” Shimmin said.

However, the smaller search engines might be in breach of their contracts, according to Mueller, because Microsoft defines the permitted uses of Bing’s search data.

There is also some evidence to support Microsoft’s concern, as several smaller engines, such as You.com and Neeva, already offer their own chatbots similar to Bing’s.

Large language models to influence the role of web index data

OpenAI’s strategy of training its AI models on closed data sets might influence the future of search, according to Shimmin.

“It’s easy then to see how this closed system approach to LLMs will in turn influence the very role web index data plays in supporting the already emerging shift away from searching for websites to instead requesting insight from LLMs that build their results upon this valued commodity,” Shimmin said, adding that one should expect more proprietary intellectual property to emerge around these LLMs in support of both consumer and enterprise use cases.