Google today announced several upgrades to its search, translate and maps applications that use artificial intelligence (AI) and augmented reality (AR) to provide expanded answers and live search features that can instantaneously identify objects and locations around a user.

At an event in Paris, the company demonstrated upgrades for its Google Maps Immersive View and Live View technology, along with new features that show 3D routes in real time for electric vehicle (EV) drivers and people who walk, bike or ride public transit. Live View, announced in 2020, allows users to get directions placed in the real world and on a mini map at the bottom of a mobile screen.

Google’s Immersive View, first previewed last year, is now live in five cities. An upgrade to the Google Maps navigation feature, Immersive View uses AI and AR to combine billions of street view and aerial images to create a digital model of the real world that can be used to guide users on a route. That feature is now available in London, Los Angeles, San Francisco, New York and Tokyo.

Search with Live View and Immersive View are designed to help a user find things close by (such as ATMs, restaurants, parks, and transit stations) just by lifting a phone while on the street. The features also offer helpful information, such as whether a business is open, how busy it is right now, and how highly it’s rated.

An example of Google search with Live View. (Image: Google)

Immersive View also allows aerial views. So, for example, users visiting the Rijksmuseum in Amsterdam could use it to virtually soar over the building and see entrance locations. And a “time slider” shows an area at different times of day and with weather forecasts.

“You can also spot where it tends to be most crowded so you can have all the information you need to decide where and when to go,” Google said. “If you’re hungry, glide down to the street level to explore nearby restaurants — and even take a look inside to quickly understand the vibe of a spot before you book your reservation.”

Google said the AR technology used to create the overlaid 3D maps can be especially helpful when navigating tricky places, such as an unfamiliar airport. In 2021, the company introduced indoor Live View for select locations in the US, Zurich and Tokyo for just that purpose. It uses AR-generated arrows to point a user to locations like restrooms, lounges, taxi stands, and car rentals.

Over the next few months, Google plans to expand indoor Live View to more than 1,000 new airports, train stations, and malls in Barcelona, Berlin, Frankfurt, London, Madrid, Melbourne, Paris, Prague, São Paulo, Singapore, Sydney, and Taipei.

To create the realistic scenes in Immersive View, Google uses neural radiance fields (NeRF), an advanced AI technology that transforms photos into 3D representations.

“With NeRF, we can accurately recreate the full context of a place including its lighting, the texture of materials and what’s in the background. All of this allows you to see if a bar’s moody lighting is the right vibe for a date night or if the views at a cafe make it the ideal spot for lunch with friends,” Google said.

Immersive View with Google’s Live View technology also allows a user to use their mobile device’s camera to experience a neighborhood, landmark, restaurant or popular venue before going inside. So, for example, pointing it at a store allows a person to “enter” the store and walk around without even going inside. The feature also identifies landmarks, not only in real time, but also on saved video.

Google’s Live View allows a user’s mobile device camera to discover information about landmarks, such as buildings, not only in real-time video but also in saved clips. (Image: Google)

Last year, Google launched a feature called multisearch in the US. It allows for search using text and images at the same time.

Google launched multisearch in the US in 2022 and said it is now available worldwide. (Image: Google)

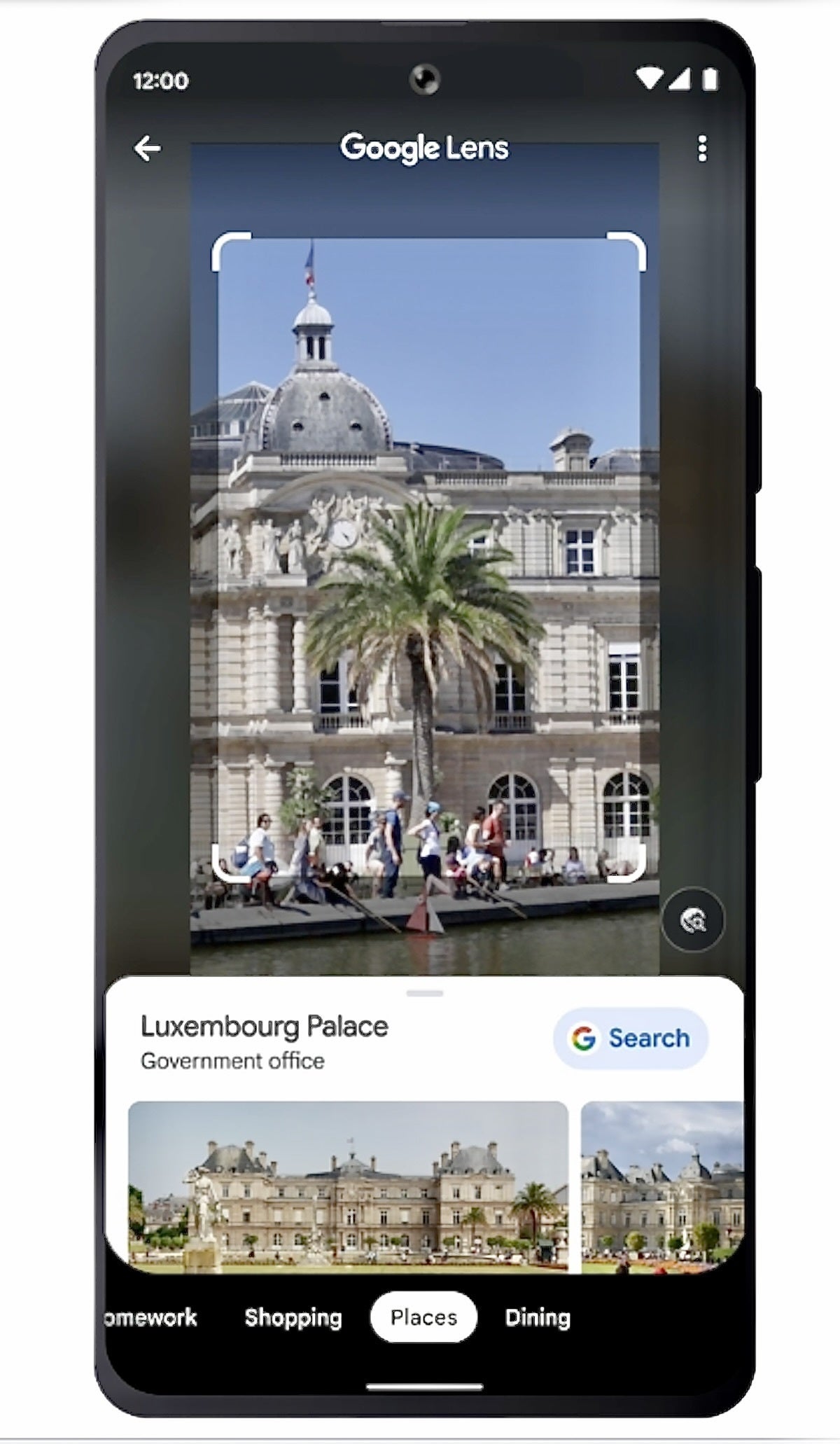

Today, Google announced that multisearch is live globally on mobile devices, in all languages and countries where Google Lens is available. It also announced “multisearch near me,” which allows users to snap a picture or take a screenshot of an object, such as a couch or oven, and then find the merchandise nearby. The company plans to launch “multisearch near me” over the next few months in all languages and countries where Lens is available.

Google’s app updates were timed to compete with announcements by Microsoft on Tuesday about enhancements to its Bing search engine. Microsoft, through its partnership with OpenAI, launched upgrades to both Bing and the Edge browser, allowing users to chat or text with the search engine and receive essay-like answers similar to what ChatGPT produces.

“While Google spoke about the promise of AI and demo’ed parts of it (not necessarily on the core search), and the capabilities being in ‘limited preview,’ Microsoft announced and showcased deeper integration of AI features into the Bing search and Edge browser, had us play around with it, and talked in detail about the Responsible AI steps that they have taken so far and plan to take on an ongoing basis,” said Ritu Jyoti, IDC’s group vice president of Worldwide Artificial Intelligence and Automation Research.

“Microsoft’s event announcing new Bing on the Edge browser was about one thing: getting more search users,” said Jason Wong, a distinguished vice president and software design and development analyst with Gartner Research. “The Microsoft investor presentation that followed indicated that a 1% uplift in search could lead to $2 billion in revenue for their advertising business.”

What Google announced today was about showing that a mere chatbot interface on top of a search engine will be table stakes, Wong said.

“Search is more than just typing phrases or questions in a browser. The need for real-time, contextual answers and information spans our daily activities. We are just beginning to reimagine the last 25 years of the web through generative AI,” Wong said.

Google also announced upgrades to its Translate app, providing users with more context. For example, if a user were to ask about the best constellations for stargazing and receive a list, the user could then ask about the best time of year to see them.

Google Translate also provides the option to see multiple meanings for questions and help a user find the best translation. The update will start rolling out in English, French, German, Japanese, and Spanish in the coming weeks.

“Search will never be a solved problem. Search is our biggest moonshot,” said Prabhakar Raghavan, Google’s senior vice president in charge of search, assistant and geo.

Prabhakar Raghavan, Google’s senior vice president in charge of search, assistant and geo, speaking at the company’s Paris event. (Image: Google)

Google Translate uses a proprietary technology called Google Neural Machine Translation (GNMT), announced in 2016, along with Zero-Shot Translation, to translate between language pairs never seen explicitly by the AI system. So, if someone speaks a sentence in English, the application can translate it into any of 133 languages.

Google also announced a partnership and strategic stake in a competitor to OpenAI, and launched its own chatbot called Bard. Bard is an experimental, conversational AI service that Google said is powered by a technology called Language Model for Dialogue Applications (or LaMDA for short).

Google opened up Bard to beta testers this week ahead of making it more widely available soon.

Wong said Google was caught off guard by the success of ChatGPT, which reached one million users in just five days.

“Many people will likely compare Bing’s ChatGPT responses to Google Bard’s answers just to see what the differences are. Without a mobile presence for this new Bing chatbot interface, it will likely be hard for Microsoft to make serious inroads into the consumer search business,” Wong said.